Kodiak Vision: Turning data into insight

Self-driving vehicles depend on a wide range of sensors (cameras, radar, lidar, and occasionally others) to see the environment around them. Lidar, which bounces short laser bursts off objects to create 3D representations of its surroundings, has received the most attention, due both to its usefulness and to Tesla’s well-publicized aversion to it. But in the last few years we have also seen impressive innovation in cameras and radar for self-driving vehicles. Whereas a decade ago a self-driving startup would have had to develop much of this technology in-house, at Kodiak AI we have been fortunate to have access to a deep market of commercially available sensors from day one.

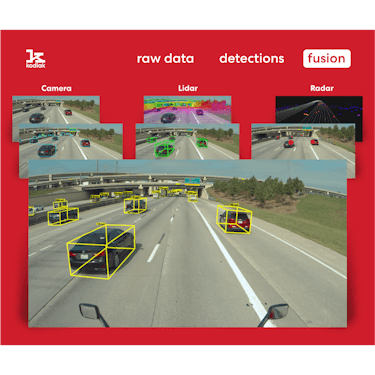

Powerful as all these sensors are, they only collect raw data; on their own, they do not produce the actionable information the Kodiak Driver needs to decide how and where to drive. The Kodiak Driver’s perception layer processes the sensors’ data to build an understanding of the world around the vehicle. We call our perception system Kodiak Vision.

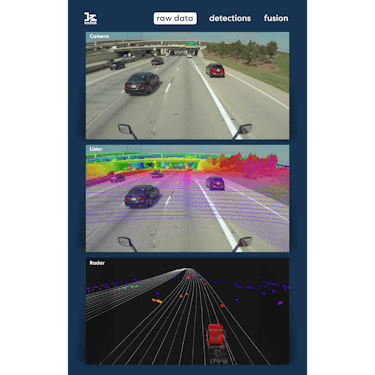

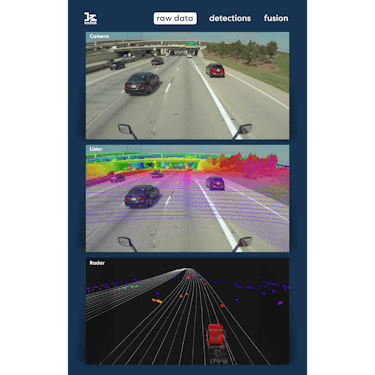

The Kodiak Driver’s sensor suite collects raw data about the world around the truck

Perception is an ongoing optimization problem. You need to decide what conclusions best explain the data you have, how likely each of those conclusions is to be accurate, and how you can improve those conclusions with each new sensor reading.
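
In its simplest textbook form, this loop (weigh the candidate conclusions, score how likely each is, refine them with every new reading) is a discrete Bayesian update. The sketch below is purely illustrative: the three hypotheses and every likelihood number are made up, and a real perception system reasons about far richer state than a single label.

```python
def bayes_update(prior: dict[str, float], likelihood: dict[str, float]) -> dict[str, float]:
    """Posterior P(h | z) is proportional to P(z | h) * P(h), normalized over all hypotheses."""
    unnormalized = {h: prior[h] * likelihood.get(h, 0.0) for h in prior}
    total = sum(unnormalized.values())
    if total == 0.0:
        raise ValueError("the reading is inconsistent with every hypothesis")
    return {h: p / total for h, p in unnormalized.items()}

# Hypothetical hypotheses about one partially visible object, starting uninformed.
belief = {"car": 1 / 3, "truck": 1 / 3, "bus": 1 / 3}

# Each reading states how likely it would be under each hypothesis (made-up numbers).
readings = [
    {"car": 0.2, "truck": 0.5, "bus": 0.3},
    {"car": 0.1, "truck": 0.6, "bus": 0.3},
]
for likelihood in readings:
    belief = bayes_update(belief, likelihood)

best = max(belief, key=belief.get)  # the conclusion that best explains the evidence so far
```

The alternatives are never discarded; they simply carry less probability, so a later reading can still overturn the leading conclusion.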

Self-driving systems typically answer these questions by processing sensor readings through detectors: software tools that identify where potential objects like cars and road signs are, and what they might be. Multiple detectors may process the same data in different ways to further ensure that the perception system correctly locates and classifies each object. Combining all this information to create a coherent view of the vehicle’s surroundings is known in the industry as sensor fusion.

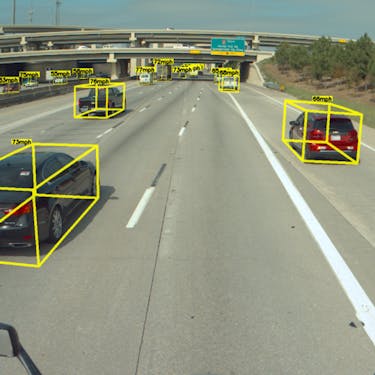

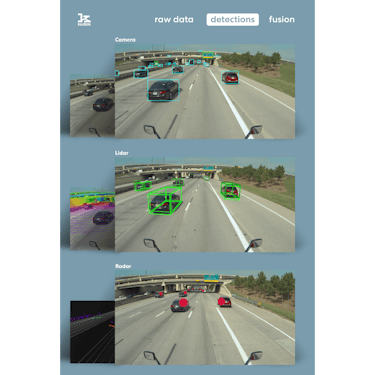

Detectors process raw data to locate and classify objects

Needless to say, meaningfully fusing the outputs of multiple sensors and dozens of detectors is a tremendous challenge. When faced with such a challenge, the instinct can sometimes be to try to find clever rules, or heuristics, to simplify the problem. You may know that these heuristics are imperfect, but they allow you to progress pretty quickly, and you figure you can take care of the really hard issues, or edge cases, later. Investors are inevitably impressed when they take their first ride and the self-driving system seems to work well after only a few months of work.

Take a common example. Today’s sensors are very powerful, but of course they don’t have Superman’s X-ray vision. As a result, they see a lot of occluded vehicles: vehicles partially hidden behind other vehicles. So what do you do when you see only the front quarter of a truck? You can’t ignore it; that would be foolish and dangerous when you have such strong evidence that the truck exists. So you assume that the truck exists. But what do you know about the truck’s dimensions? An obvious heuristic is to assume that it has a standard length and width. In the short run, this kind of rules-based heuristic works well. Perhaps a little too well. Right off the bat, your system reacts as if there were another vehicle behind the one in front, which improves performance.
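
To make the shortcut concrete, here is a hypothetical sketch of the rules-based fix described above. The 22 m length, 2.6 m width, and the 60 m oversized load are illustrative assumptions, not real Kodiak parameters. Note what the function returns: a single box, with nothing recording how much of it was actually observed.

```python
from dataclasses import dataclass

# Hypothetical "standard" dimensions a rules-based tracker might hard-code (metres).
ASSUMED_TRUCK_LENGTH_M = 22.0
ASSUMED_TRUCK_WIDTH_M = 2.6

@dataclass
class Box:
    near_x: float  # longitudinal position of the visible end closest to us (m)
    length: float
    width: float

def heuristic_box(near_x: float) -> Box:
    """Rules-based guess: the visible end plus a standard-size body behind it.

    The result carries no information about how uncertain the assumed length is.
    """
    return Box(near_x=near_x, length=ASSUMED_TRUCK_LENGTH_M, width=ASSUMED_TRUCK_WIDTH_M)

box = heuristic_box(near_x=30.0)
far_x = box.near_x + box.length  # where the rule believes the truck ends

# Hypothetical oversized load: the rule silently misplaces the far end.
blade_far_x = box.near_x + 60.0
missed_m = blade_far_x - far_x
```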

But this kind of heuristic has significant long-run limitations. Even though there’s logic behind the rule, a lot of useful information can get lost in the shuffle. First, you might lose your sense of how certain you are of the occluded vehicle’s location and shape. Second, the universe has a way of finding holes in your clever heuristics, forcing you to add ever more special cases. For example, what do you do if the occluded vehicle turns out to be hauling an extra-long wind turbine blade down the highway? You’re in trouble right when you need your system to perform at its best.

The Kodiak Driver encounters a truck hauling a wind turbine blade

So what do you do? You write another heuristic to cover this special case. Soon, almost all of your code is devoted to finding ways to deal with exceptions. The rules become extremely complex, and therefore very difficult to modify. With so many overlapping rules, you don’t have a clean way to add a new detector, let alone a new sensor, without breaking all your rules and rewriting a sizable portion of your perception code. By the time you realize your mistake, you have a choice to make: come up with another heuristic, or tell your investors, “sorry, you can’t get a test drive this quarter because we need to completely rewrite our perception code.” Guess which option most people choose.

We’ve designed Kodiak Vision to be different. Over the past decade, we’ve seen rules-based approaches fail again and again. Our experience taught us not to rely too much on the latest technological breakthrough or innovation. Instead, we went back to basics. We’ve adopted a disciplined approach, rooted in rigorous fundamental theory, with a strong commitment to avoiding rules, heuristics, and shortcuts. We systematically apply what information theory and state-estimation pioneers like Shannon, Wiener, Kolmogorov, and Chapman tell us about how to track multiple objects in the same scene, building on principles originally developed decades ago. These principles aren’t secrets: there are whole textbooks devoted to them, and they are foundational for many complex modern technologies such as missile defense systems and GPS. But it’s rare for autonomous vehicle companies to explicitly enforce their use throughout the codebase.
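
For orientation, the textbook form of these principles is the recursive Bayesian filter: a prediction step (the Chapman-Kolmogorov equation) followed by a measurement update (Bayes’ rule). This is the standard general statement, not a description of Kodiak’s exact formulation. Here $x_k$ is an object’s state at cycle $k$, $z_k$ the detections received at that cycle, and $z_{1:k}$ all detections up to and including cycle $k$.

$$
p(x_k \mid z_{1:k-1}) = \int p(x_k \mid x_{k-1})\, p(x_{k-1} \mid z_{1:k-1})\, dx_{k-1}
$$

$$
p(x_k \mid z_{1:k}) = \frac{p(z_k \mid x_k)\, p(x_k \mid z_{1:k-1})}{\int p(z_k \mid x_k')\, p(x_k' \mid z_{1:k-1})\, dx_k'}
$$

When the motion model and the detector noise are linear and Gaussian, both integrals have a closed form, and the recursion becomes the Kalman filter.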

Take the example of the occluded vehicle. Kodiak Vision aims to correctly frame the uncertainty. We know that there’s one vehicle hidden behind another, and we know the precise location of the vehicle surface closest to us. Instead of assuming we know the vehicle’s precise dimensions, we make an honest assessment consistent with the data and calculate our (probably high) level of uncertainty. That is OK! We know what we know, we know what we don’t know, and we are honest about it. We can roll with the uncertainty as long as we correctly manage it. This approach has a huge benefit: it is true and correct, at peace with the universe.
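
A minimal way to express this is to keep every quantity as a Gaussian (best guess plus variance) rather than a bare number. The sketch below assumes scalar, independent Gaussian errors and uses made-up numbers: the near face is tight because it is measured directly, the hidden length is deliberately vague, and a later detection narrows it through a standard one-dimensional Kalman update.

```python
import math
from dataclasses import dataclass

@dataclass
class Gaussian:
    """A scalar estimate: best guess plus variance (its honest uncertainty)."""
    mean: float
    var: float

    @property
    def std(self) -> float:
        return math.sqrt(self.var)

def fuse(prior: Gaussian, measurement: Gaussian) -> Gaussian:
    """1-D Kalman update: combine two independent Gaussian estimates of the same quantity."""
    gain = prior.var / (prior.var + measurement.var)
    return Gaussian(prior.mean + gain * (measurement.mean - prior.mean), (1.0 - gain) * prior.var)

# Hypothetical numbers (metres). The near face is measured directly, so it is tight;
# the hidden length is only loosely constrained, so its variance is deliberately large.
near_face = Gaussian(mean=30.0, var=0.1 ** 2)
length = Gaussian(mean=15.0, var=10.0 ** 2)

# Later, a detector sees more of the vehicle and reports a length with moderate confidence.
length = fuse(length, Gaussian(mean=40.0, var=3.0 ** 2))

# Far end = near face + length; variances add if the two errors are independent (assumed).
far_end = Gaussian(near_face.mean + length.mean, near_face.var + length.var)
```

Unlike a fixed-size box, a surprising detection (such as a very long load) simply pulls the estimate toward the evidence instead of contradicting a hard-coded rule.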

Relying on fundamental mathematics instead of clever software tricks takes discipline. Nobody sets out to create a perception system that’s a spaghetti tangle of overlapping rules, but it happens when you’re under pressure to show results quickly. It also cuts against the predominant culture of software development, which frequently values clever solutions that work for the moment over solutions rooted in theory. Our fundamentals-based approach certainly caused the Kodiak Driver to improve more slowly at first, which at times was painful. But it also means that our system is more robust to whatever the universe may throw at it. And now that we’re 18 months into on-road testing, Kodiak Vision’s solid foundation has allowed us to make incredibly rapid progress.

Central to our fundamentals-based approach is our sensor fusion solution, which we call the Kodiak Vision Tracker. The Tracker is responsible for fusing every detection from every sensor, then combining them over time to track every object on the road. Essentially, the Tracker defines a common interface for detectors based on the fundamental mathematics of information theory. It allows detectors both to describe what they’re seeing and honestly assess how certain they are about what they see, taking into account the physical properties and peculiarities of each specific sensor. The Tracker isn’t an ad hoc solution to one problem or another. It applies to every detector and every detection. The Tracker decouples detections from tracking. It does not have access to raw data about the outside world, but instead only sees the detectors’ outputs — object detections, ranges, velocities, accelerations, vehicle boxes, and other pieces of information.

The Kodiak Vision Tracker fuses the products of every detector
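
One way to picture a common detector interface is a record that every detector fills in, stating what it measured and how uncertain it is. The sketch below is hypothetical: the `Detection` fields, the two-element state (position and velocity along the lane), the detector names, and the restriction that each detection observes a single state component are simplifying assumptions, not Kodiak’s actual interface. The update function is the standard Kalman measurement update and never touches raw sensor data.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Detection:
    """Hypothetical common detector output: one measured state component plus its variance."""
    detector: str    # which detector produced it (useful for bookkeeping and calibration)
    component: int   # index into the state: 0 = position (m), 1 = velocity (m/s)
    value: float     # the measured value
    variance: float  # the detector's own estimate of its error variance

def update(x: list[float], P: list[list[float]], det: Detection) -> tuple[list[float], list[list[float]]]:
    """Kalman measurement update for a detection that observes a single state component."""
    i = det.component
    n = len(x)
    s = P[i][i] + det.variance                 # innovation variance
    k = [P[r][i] / s for r in range(n)]        # Kalman gain column
    innovation = det.value - x[i]
    x_new = [x[r] + k[r] * innovation for r in range(n)]
    # P_new = P - K H P, where H P is simply row i of P for a single-component measurement.
    P_new = [[P[r][c] - k[r] * P[i][c] for c in range(n)] for r in range(n)]
    return x_new, P_new

x = [30.0, 0.0]                    # vague prior: position (m), velocity (m/s)
P = [[25.0, 0.0], [0.0, 25.0]]
for det in (
    Detection("lidar_box", component=0, value=31.0, variance=0.04),
    Detection("radar_doppler", component=1, value=-1.5, variance=0.25),
):
    x, P = update(x, P, det)
```

Because the update only reads `value`, `variance`, and `component`, it treats every detector the same way; sensor-specific quirks live inside each detector’s variance estimate.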

Return to the example of the occluded vehicle. A detector can tell the Tracker, “I’m very certain this partial vehicle I see is a bus” or, “I see something here; it looks like the hood of a car, but I’m not especially confident.” Over time, the Tracker lets us build a rich description of what’s happening around the truck, filling in the details, sensor reading by sensor reading. Twenty times a second, the Tracker looks at the new information it receives from the truck’s detectors and uses that information to refine its hypotheses about what it sees around the truck. It then assesses what conclusion best explains the whole body of evidence, without prejudice, rules, or hard-coding. The Tracker then determines how likely that conclusion is, identifies likely alternate possibilities, and submits a detailed description of the world to the truck’s self-driving system.
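
The twenty-times-a-second cycle can be sketched as a predict-then-update loop. This is a minimal constant-velocity Kalman filter under stated assumptions: a one-dimensional position/velocity state, a hypothetical process-noise level, and a simulated position detector with 0.2 m noise. It illustrates the refine-with-every-reading pattern, not the Tracker’s actual motion model.

```python
import random

DT = 1.0 / 20.0  # twenty cycles per second
Q_ACCEL = 1.0    # hypothetical process noise: variance of unmodeled acceleration (m^2/s^4)

def predict(x, P, dt=DT, q=Q_ACCEL):
    """Constant-velocity prediction: x <- F x, P <- F P F^T + Q, with F = [[1, dt], [0, 1]]."""
    (a, b), (c, d) = P
    x_pred = [x[0] + dt * x[1], x[1]]
    # Q for discretized white-noise acceleration: q * [[dt^4/4, dt^3/2], [dt^3/2, dt^2]].
    P_pred = [
        [a + dt * (b + c) + dt * dt * d + q * dt**4 / 4, b + dt * d + q * dt**3 / 2],
        [c + dt * d + q * dt**3 / 2, d + q * dt**2],
    ]
    return x_pred, P_pred

def update_position(x, P, z, r):
    """Kalman update with a position-only measurement z of variance r (H = [1, 0])."""
    s = P[0][0] + r                 # innovation variance
    k = [P[0][0] / s, P[1][0] / s]  # Kalman gain
    innovation = z - x[0]
    x_new = [x[0] + k[0] * innovation, x[1] + k[1] * innovation]
    P_new = [[P[i][j] - k[i] * P[0][j] for j in range(2)] for i in range(2)]
    return x_new, P_new

# Simulated object starting 20 m ahead and pulling away at 2 m/s (hypothetical scenario).
rng = random.Random(0)
x, P = [20.0, 0.0], [[4.0, 0.0], [0.0, 100.0]]  # vague initial guess, especially for velocity
for step in range(1, 41):                         # two seconds of 20 Hz cycles
    x, P = predict(x, P)
    true_position = 20.0 + 2.0 * step * DT
    z = true_position + rng.gauss(0.0, 0.2)       # hypothetical detector noise, sigma = 0.2 m
    x, P = update_position(x, P, z, r=0.2 ** 2)
```

Velocity is never measured directly here; the filter infers it from how successive position readings move, and its variance shrinks as the evidence accumulates.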

Basing Kodiak Vision on such fundamental mathematics gives us tremendous flexibility. We can interchange Kodiak Vision’s components, work on detectors independently, and easily fuse in new detectors. Since all our detectors speak the same language, the Tracker can automatically assimilate the new information from new detectors without requiring any new code. The same logic applies to new sensors. Since our code is not tied to the idiosyncrasies of specific sensor makes and models, we can easily adopt the latest technology as it becomes available. This gives us a significant advantage in the rapidly evolving self-driving industry.
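
The "same language" idea can be illustrated with information-form fusion, where each detection simply contributes its information (inverse variance). This sketch assumes independent, unbiased Gaussian range errors; the `RangeDetection` record and detector names are hypothetical. Adding a detector means appending another record: the fusion code does not change, and the result does not depend on the order in which detections arrive.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class RangeDetection:
    """Hypothetical shared output format: a range to one object plus its error variance."""
    detector: str
    range_m: float
    variance: float  # m^2, reported by the detector itself

def fuse(detections: list[RangeDetection]) -> tuple[float, float]:
    """Information-form fusion of independent, unbiased Gaussian range estimates.

    Each detection contributes information 1/variance; the fused variance is the inverse
    of the total information, and the fused range is the information-weighted mean.
    """
    if not detections:
        raise ValueError("need at least one detection")
    information = sum(1.0 / d.variance for d in detections)
    weighted = sum(d.range_m / d.variance for d in detections)
    return weighted / information, 1.0 / information

detections = [
    RangeDetection("camera_depth", 41.0, 4.0),
    RangeDetection("lidar_cluster", 40.2, 0.04),
]
before = fuse(detections)

# A new detector needs no new fusion code: it only has to emit the same record.
detections.append(RangeDetection("radar_peak", 40.5, 0.25))
after = fuse(detections)
```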

The Tracker also allows us to better manage the uncertainty inherent in driving. When the Tracker is uncertain about what or where an object is, it can flag it for extra scrutiny or instruct the vehicle to give the object additional space, just as a human would. Lastly, the same flexibility that allows us to easily add sensors applies to taking them away: if a sensor malfunctions, the Kodiak Driver won’t go blind. This gives our trucks additional redundancy and robustness; if we lose a sensor, the Kodiak Driver will take the safest and most appropriate action using the remaining sensors. This is a critical safety capability as we approach deployment.
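
Because the Tracker reports uncertainty alongside every estimate, downstream logic can turn it into behavior. The sketch below is a hypothetical policy, not Kodiak’s: it widens the requested lateral clearance in proportion to the position standard deviation and flags objects whose uncertainty exceeds a threshold. All three constants are illustrative assumptions. Losing a sensor shows up as a larger variance, which automatically produces a more cautious buffer rather than a blind spot.

```python
import math

BASE_CLEARANCE_M = 1.0  # hypothetical minimum lateral gap to any tracked object
K_SIGMA = 3.0           # hypothetical: cover about three standard deviations
SCRUTINY_STD_M = 0.3    # hypothetical: above this, flag the object for extra attention

def lateral_buffer(lateral_variance_m2: float) -> float:
    """Requested clearance grows with the tracker's uncertainty about the object's position."""
    return BASE_CLEARANCE_M + K_SIGMA * math.sqrt(lateral_variance_m2)

def needs_scrutiny(lateral_variance_m2: float) -> bool:
    """Flag objects whose position standard deviation exceeds the threshold."""
    return math.sqrt(lateral_variance_m2) > SCRUTINY_STD_M

healthy = lateral_buffer(0.05 ** 2)  # all sensors reporting: about 1.15 m
degraded = lateral_buffer(0.4 ** 2)  # a sensor lost, wider estimate: about 2.2 m
```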

The Kodiak Vision Tracker even allows us to update individual detectors, improving their performance over time. At the end of every drive, we can analyze every detector and say, “During the last run, you were systematically biased by a few centimeters; please adjust your measurements accordingly” or, “you were more correct today, so tomorrow I will pay more attention to you.” As we approach deployment, we will work to add the ability to do these corrections in real-time, on the road. This will allow us to not only reduce the overall level of uncertainty in our perception system, but also to understand the characteristic biases and uncertainty signatures of each sensor and detector.
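
The end-of-drive review can be sketched as residual statistics per detector: the mean residual estimates a systematic bias, and the spread around it estimates noise variance, which in turn sets how much weight the detector gets next time (weight proportional to 1/variance). This is a minimal sketch under assumptions: the residuals are made up, and using the Tracker’s own after-the-fact estimate as the reference is itself an approximation, since that reference has errors of its own.

```python
from statistics import fmean, pvariance

def calibrate(residuals_by_detector: dict[str, list[float]]) -> dict[str, tuple[float, float]]:
    """Estimate each detector's bias and noise variance from one drive's residuals.

    A residual is (detector measurement - the tracker's after-the-fact estimate of the
    same quantity). The mean residual is the systematic bias; the spread around it is noise.
    """
    report = {}
    for name, residuals in residuals_by_detector.items():
        bias = fmean(residuals)
        report[name] = (bias, pvariance(residuals, mu=bias))
    return report

# Hypothetical residuals in metres from a single drive.
report = calibrate({
    "lidar_cluster": [0.03, 0.05, 0.04, 0.04],  # consistently about 4 cm long
    "camera_depth": [0.5, -0.3, 0.2, -0.4],     # unbiased but noisy
})

# Next drive: subtract each bias, and weight each detector by 1 / variance.
weights = {name: 1.0 / var for name, (bias, var) in report.items()}
```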

There’s tremendous pressure in Silicon Valley to implement what’s new. Claims like, “We use the latest neural net!” or “Our amazing new camera sees over half a mile and solves all of our sensor problems!” can be very convincing. Kodiak has focused on implementing what’s right, using the latest technology thoughtfully, instead of as a crutch. In fact, our fundamentals-based approach has given us the foundation we need to rapidly integrate revolutions in deep learning or computer vision without rewriting our codebase. It’s amazing to see such rapid progress, and even more amazing to see how we got here, by following fundamental principles.

Safe and sound journeys!