Taking self-driving trucks to new dimensions with 4D radar


As a hardware engineer at Kodiak Robotics, I have seen first-hand the unique challenges of developing self-driving trucking technology. While the differences between cars and trucks may seem superficial, they influence every aspect of a self-driving vehicle stack — from hardware design to software requirements.

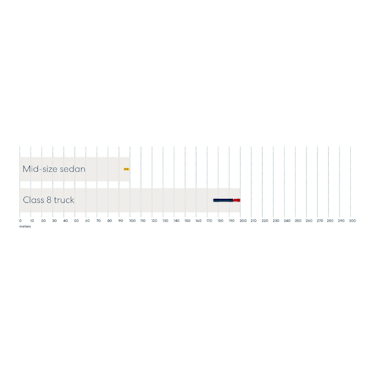

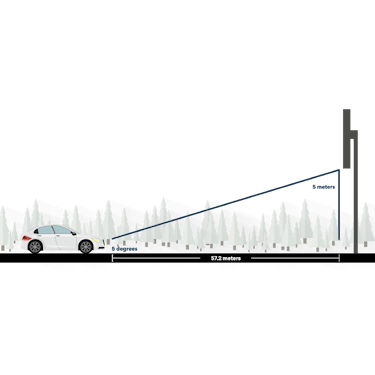

Take the most obvious difference between cars and trucks: their vastly different sizes. A typical passenger sedan weighs 3,000–4,000 pounds, and even large SUVs top out at approximately 6,000 pounds. Given their size and weight, a typical passenger car can stop from 60 mph in as little as 40 meters. In contrast, a fully loaded heavy-duty truck can weigh up to 80,000 pounds. Such big, heavy vehicles are much harder to maneuver: a heavy-duty truck traveling at 60 mph requires at least 75 meters to stop. While today’s braking systems can stop that quickly if necessary, a more comfortable braking distance for a loaded tractor and trailer is 200 meters, more than two football fields. These different stopping distances mean that while an autonomous car can operate safely with 100 meters of vision, a heavy-duty truck needs to perceive obstacles in the road at 200 meters or more.

Comfortable braking distance in meters at 60 mph
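To make the stopping distances above more concrete, here is a minimal sketch that converts them into the average deceleration and stopping time they imply. It assumes constant deceleration on a flat road and ignores any reaction or system latency, so it is an illustration of the arithmetic rather than a braking model; the function names are hypothetical.

```python
MPH_TO_MPS = 0.44704   # exact conversion: 1 mph = 0.44704 m/s
G_MPS2 = 9.80665       # standard gravity, used only to express results in g


def implied_deceleration(speed_mph: float, stopping_distance_m: float) -> float:
    """Constant deceleration (m/s^2) needed to stop within the distance: a = v^2 / (2d)."""
    if stopping_distance_m <= 0:
        raise ValueError("stopping distance must be positive")
    v = speed_mph * MPH_TO_MPS
    return v ** 2 / (2.0 * stopping_distance_m)


def stopping_time_s(speed_mph: float, stopping_distance_m: float) -> float:
    """Time to stop under constant deceleration: t = v / a = 2d / v."""
    v = speed_mph * MPH_TO_MPS
    return 2.0 * stopping_distance_m / v


# Distances quoted in the article, all from 60 mph.
SCENARIOS = {
    "passenger car, hard stop": 40.0,
    "loaded truck, hard stop": 75.0,
    "loaded truck, comfortable stop": 200.0,
}

for name, distance_m in SCENARIOS.items():
    a = implied_deceleration(60.0, distance_m)
    t = stopping_time_s(60.0, distance_m)
    print(f"{name}: {a:.2f} m/s^2 ({a / G_MPS2:.2f} g), {t:.1f} s")
```

Under these assumptions, the comfortable 200-meter stop works out to roughly 0.18 g, compared with about 0.9 g for the passenger car's 40-meter stop.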

Perception is the process of taking numerous sensor readings (from cameras, radars, LiDARs, and others) to build a rich, representative model of the world around the vehicle. Over the past decade, most self-driving systems have used LiDAR as their primary sensor, while layering in other sensors to add nuance. For example, many systems use cameras to detect brake lights, and radar to compensate for LiDAR’s lower effective range in the rain.

In Kodiak Vision: Turning data into Insight, we discussed how we have designed the Kodiak Driver’s perception system, Kodiak Vision, to account for the unique long-range perception needs of self-driving trucks. Instead of relying primarily on LiDAR and then working around its limitations, Kodiak Vision treats all its sensors as primary by creating a shared language between different sensor types. This shared language allows each sensor to describe what it sees in a manner that accounts for the physical properties and peculiarities of each specific sensor, and enables the Kodiak Driver to maximize performance by taking advantage of each sensor’s unique properties. This common language also allows us to seamlessly and rapidly integrate new sensors and technologies into the Kodiak Driver.
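As a rough illustration of what a "shared language" between sensors could look like, the sketch below has every sensor report the same kind of measurement together with its own uncertainty, and combines the reports with inverse-variance weighting. This is not Kodiak Vision's actual design: the `Measurement` type, its fields, the `fuse_range` helper, and the example numbers are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass(frozen=True)
class Measurement:
    """Hypothetical sensor-agnostic report of one object."""
    sensor: str                                   # e.g. "radar", "lidar", "camera"
    range_m: float                                # estimated distance to the object
    range_std_m: float                            # 1-sigma uncertainty for this sensor
    radial_velocity_mps: Optional[float] = None   # None if the sensor cannot measure it


def fuse_range(measurements: Sequence[Measurement]) -> Tuple[float, float]:
    """Inverse-variance weighted range estimate and its 1-sigma uncertainty."""
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / (m.range_std_m ** 2) for m in measurements]
    total = sum(weights)
    fused = sum(w * m.range_m for w, m in zip(weights, measurements)) / total
    return fused, (1.0 / total) ** 0.5


# Illustrative numbers only: each sensor states how much it trusts its own reading.
reports = [
    Measurement("radar", 250.0, 0.5, radial_velocity_mps=-3.2),
    Measurement("lidar", 252.0, 2.0),
    Measurement("camera", 240.0, 10.0),
]
fused_range, fused_std = fuse_range(reports)
print(f"fused range: {fused_range:.1f} m (+/- {fused_std:.2f} m)")
```

Because each report carries its own uncertainty, no sensor is hard-coded as primary, and adding a new sensor type only requires it to produce the same kind of report.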

It is this flexibility that allowed us to team up with ZF Group to help test and deploy their groundbreaking ZF Full-Range Radar technology. We are incredibly excited to make the ZF sensor our primary long-range radar for the Kodiak Driver, and we see this radar technology as critical to bringing self-driving trucks to market at scale. The ZF Full-Range Radar allows our perception system to accurately track vehicle velocity at ranges of up to 350 meters, even in harsh weather conditions. Integrated with the other sensors in our perception system, it lets us build a highly precise and expansive model of the world around our self-driving trucks.

Groundbreaking 4D Radar To Power Self-Driving Trucks

Traditional radar technology tracks objects in three dimensions: it returns an object’s horizontal position, distance, and velocity. Note that an object’s vertical position is not on this list: traditional automotive radar has difficulty distinguishing overhead objects, such as road signs and bridges, from road hazards like stopped vehicles. In other words, traditional radar sees the world almost as if from the top down. Automotive automatic emergency braking (AEB) systems typically use bumper-mounted traditional radars with a small vertical field of view, so overhead signs stay outside the beam for the first 50 m in front of the vehicle. The AEB system can then classify potential collisions and come to a full stop within that 50 m limit. Because trucks need much longer stopping distances, this short-range approach is not enough: self-driving trucks need a radar that is accurate at very long ranges.

The ZF Full-Range Radar measures the critical fourth dimension that a traditional radar cannot, an object’s vertical position, while maintaining its accuracy at ranges of 300 m or more.
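To show why the elevation measurement matters at long range, here is a minimal geometric sketch that turns a detection's range and elevation angle into a height above the road and uses it to separate overhead structures from on-road hazards. It assumes a flat road and a level sensor; the `RadarDetection` type, the 1.0 m mounting height, and the 4.5 m clearance threshold are hypothetical values chosen for illustration, not parameters of the ZF sensor or the Kodiak Driver.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class RadarDetection:
    """Hypothetical 4D radar return: range, azimuth, elevation, and Doppler velocity."""
    range_m: float
    azimuth_deg: float
    elevation_deg: float
    radial_velocity_mps: float


def height_above_road(det: RadarDetection, sensor_height_m: float = 1.0) -> float:
    """Height of the detection above a flat road, given the sensor mounting height."""
    return sensor_height_m + det.range_m * math.sin(math.radians(det.elevation_deg))


def is_overhead(det: RadarDetection, clearance_m: float = 4.5,
                sensor_height_m: float = 1.0) -> bool:
    """True if the detection sits at or above the assumed clearance height."""
    return height_above_road(det, sensor_height_m) >= clearance_m


# Two stationary returns at the same range and azimuth, differing only in elevation.
bridge = RadarDetection(range_m=270.0, azimuth_deg=0.0, elevation_deg=1.0,
                        radial_velocity_mps=0.0)
stopped_car = RadarDetection(range_m=270.0, azimuth_deg=0.0, elevation_deg=-0.1,
                             radial_velocity_mps=0.0)

for name, det in (("bridge", bridge), ("stopped car", stopped_car)):
    print(f"{name}: {height_above_road(det):.2f} m above road, overhead={is_overhead(det)}")
```

At 270 m, one degree of elevation corresponds to about 4.7 m of height, so the two returns are separated by only about a degree. Without an elevation measurement they would look identical: same range, same azimuth, same velocity.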

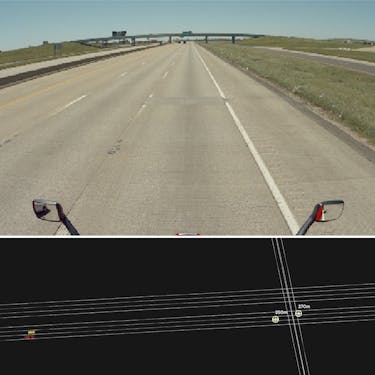

Traditional radars rely on a single Monolithic Microwave Integrated Circuit (MMIC), while the ZF Full-Range Radar cascades four MMICs, resulting in a total of 192 channels that provide highly accurate measurements of azimuth, elevation, range, and Doppler. The long-range elevation (vertical position) measurement helps the Kodiak Driver resolve complex perception scenarios such as a stopped vehicle under a bridge, or a disabled vehicle on the shoulder under a road sign.
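One way to arrive at the 192-channel figure is the usual MIMO radar arithmetic, where every transmit/receive antenna pair forms one virtual channel. The sketch below assumes each MMIC contributes 3 transmitters and 4 receivers, a common configuration for automotive radar chips; the article does not state the per-chip counts, so treat them as an assumption.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Mmic:
    """Antenna ports on one radar chip (per-chip counts are assumed, not from the article)."""
    tx: int
    rx: int


def virtual_channels(chips: Sequence[Mmic]) -> int:
    """Virtual channels of a cascaded MIMO array: total transmitters x total receivers."""
    total_tx = sum(chip.tx for chip in chips)
    total_rx = sum(chip.rx for chip in chips)
    return total_tx * total_rx


single_chip = [Mmic(tx=3, rx=4)]
cascade = [Mmic(tx=3, rx=4)] * 4

print("single MMIC:", virtual_channels(single_chip))     # 3 x 4 = 12
print("four cascaded MMICs:", virtual_channels(cascade))  # 12 x 16 = 192
```

The count grows with the product of transmitters and receivers, so cascading four chips yields sixteen times the channels of a single chip, not four times.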

Kodiak Vision tracking vehicles underneath an overpass 270m in front of the truck.

Inclement Weather and The Science of Perception

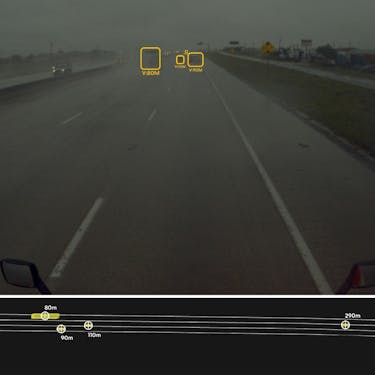

The ZF Full-Range Radar does more than provide long-range perception information to the Kodiak Driver: it’s also critical to our ability to operate 24/7, no matter the weather. The visible spectrum, i.e. the light humans can detect with our eyes, has wavelengths between 400 and 700 nanometers. Our brains perceive different wavelengths within that range as different colors. Fog particles (~1000 nanometers) and rain droplets (1–2 millimeters) are larger than the wavelength of visible light, so they absorb and reflect the light energy, making it difficult to see through them.

This is especially evident at longer ranges: in a heavy rainstorm, vehicles may seem to disappear as they get farther away. Typical LiDAR systems operate at wavelengths of 905 nanometers and 1550 nanometers. These longer wavelengths improve visibility in fog, but not to the range necessary for heavy-duty trucks at highway speeds.

Radar, on the other hand, has a wavelength of ~4 millimeters, allowing it to pass through fog and rain with minimal power loss. This allows it to cut through inclement weather and operate in difficult conditions where camera and LiDAR range may be limited. Additionally, radar, unlike LiDAR, does not present an eye safety risk, so more energy can be transmitted without being a danger to humans. This all-weather capability makes the ZF Full-Range Radar critical to making 24/7 operations a reality for Kodiak. Furthermore, Kodiak Vision and its flexible fusion tracker do not rely on radar only in harsh weather, which would require detecting those conditions and switching perception approaches. Instead, radar is a primary input to our scene inference all the time.
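The wavelength comparison can be checked with a few lines of arithmetic. The sketch below derives the radar wavelength from an assumed carrier frequency of 77 GHz (the article gives only the ~4 mm wavelength; 77 GHz lies in the band commonly used for automotive radar) and compares the fog and rain particle sizes quoted above against visible light and radar. The helper names are hypothetical.

```python
C_MPS = 299_792_458.0   # speed of light in vacuum, m/s
RADAR_FREQ_HZ = 77e9    # assumed carrier frequency; not stated in the article


def wavelength(frequency_hz: float) -> float:
    """Free-space wavelength in meters: lambda = c / f."""
    return C_MPS / frequency_hz


def size_ratio(particle_m: float, wavelength_m: float) -> float:
    """Particle diameter divided by wavelength (above 1 means the particle is larger)."""
    return particle_m / wavelength_m


radar_wavelength_m = wavelength(RADAR_FREQ_HZ)

# Particle sizes and visible-light limits as quoted in the article.
PARTICLES_M = {"fog particle": 1000e-9, "rain droplet": 2e-3}
WAVELENGTHS_M = {
    "visible, 400 nm": 400e-9,
    "visible, 700 nm": 700e-9,
    "radar, 77 GHz (assumed)": radar_wavelength_m,
}

print(f"radar wavelength: {radar_wavelength_m * 1e3:.2f} mm")
for particle, size_m in PARTICLES_M.items():
    for band, lam_m in WAVELENGTHS_M.items():
        print(f"{particle} vs {band}: ratio {size_ratio(size_m, lam_m):.3g}")
```

With these numbers, both fog particles and rain droplets are larger than visible wavelengths, while both are smaller than the radar wavelength.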

Kodiak Vision tracking vehicles in the rain 290m in front of the truck.

Creating A Safe & Reliable Autonomous System

Ultimately, driverless systems need to be at least as safe as human-operated vehicles, and that’s the standard that we hold ourselves to. By working directly with ZF, we are able to fine-tune next-generation sensors designed to meet the stringent safety requirements and grueling demands of long-haul trucking. We’re excited to be working with ZF to further advance this system and to integrate it into our production-ready trucks.